Revolutionizing Voice Assistant Development: OpenAI's 2024 Announcements

H2: Enhanced Natural Language Understanding (NLU) Capabilities

OpenAI's 2024 announcements significantly boost the natural language understanding capabilities of voice assistants, bringing them closer to truly understanding human communication. This improved NLU is crucial for creating more intuitive and helpful virtual assistants.

H3: Improved Contextual Awareness

OpenAI's latest models demonstrate a remarkable leap in contextual awareness. Voice assistants can now better grasp the nuances of human conversation, including:

- Sarcasm and humor detection: The models can now identify sarcastic remarks and understand humorous intent, leading to more appropriate and engaging responses.

- Implied meaning interpretation: The system goes beyond literal interpretation to understand implied requests and contextually relevant information. For example, it can treat "I'm freezing" as a request to raise the room temperature, even though the request is never stated explicitly.

- Improved disambiguation: The models are better at resolving ambiguity in user queries, choosing the most likely interpretation based on the ongoing conversation. This capability draws on OpenAI APIs such as the enhanced Whisper API and improved embedding models.

This improved contextual understanding dramatically enhances user experience, making interactions with voice assistants more natural and less frustrating.
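One way to picture the disambiguation step described above is to embed the utterance and each candidate intent as vectors, then pick the intent whose vector is closest by cosine similarity. The sketch below is only an illustration under stated assumptions: the intent names and the three-dimensional vectors are hypothetical, hand-written stand-ins for real embeddings, and nothing here is meant to describe how OpenAI's models work internally.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def pick_intent(utterance_vec, intent_vecs):
    """Return the name of the intent whose vector is most similar to the utterance."""
    return max(intent_vecs, key=lambda name: cosine(utterance_vec, intent_vecs[name]))


# Hypothetical intents with toy vectors; a real system would obtain these
# from an embedding model rather than writing them by hand.
INTENTS = {
    "raise_temperature": [0.9, 0.1, 0.0],
    "play_music": [0.0, 0.2, 0.9],
    "set_timer": [0.1, 0.9, 0.1],
}

# Toy stand-in for the embedding of the utterance "I'm freezing".
freezing_vec = [0.8, 0.2, 0.1]

print(pick_intent(freezing_vec, INTENTS))
```

With these toy vectors the closest intent is `raise_temperature`, which mirrors the "I'm freezing" example: the utterance never names the thermostat, but it sits nearest to that intent in the vector space.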

H3: Multilingual Support and Dialect Recognition

OpenAI's commitment to global accessibility is evident in the advancements in multilingual support and dialect recognition. The new models boast:

- Support for a wider range of languages: The expansion includes several low-resource languages previously underserved by voice assistant technology, which significantly increases global reach.

- Improved dialect recognition: The system accurately interprets various regional dialects and accents, ensuring effective communication regardless of geographical location. This includes improved handling of nuanced pronunciations and variations in vocabulary.

- Enhanced translation capabilities: Seamless integration with translation services allows for cross-lingual communication and support for multilingual users.

This broadened linguistic support opens up new opportunities for developers to create voice assistants accessible to a truly global audience.

H3: Reduced Error Rates and Improved Accuracy

Accuracy has also improved, although detailed figures have not been published. The reported gains include:

- A substantial reduction in word error rate (WER): Detailed metrics have not been publicly released, but the available information suggests a significant improvement over previous generations of models.

- Minimized misinterpretations: The system is less prone to misinterpreting commands or queries, leading to more reliable and consistent performance.

- Improved intent recognition: The models now more accurately identify the user's intended action, even with complex or ambiguous phrasing.

These improvements translate to a more robust and reliable voice assistant experience for users.
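For readers who want to measure word error rate on their own test set, WER is conventionally defined as (substitutions + deletions + insertions) divided by the number of words in the reference transcript. The following is a minimal sketch of that standard calculation using word-level edit distance; the function name and the sample sentences are illustrative, and it assumes simple whitespace tokenization with no text normalization.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance between the two transcripts, divided by reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # (before the current row) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,                      # deletion (reference word missing)
                curr[j - 1] + 1,                  # insertion (extra hypothesis word)
                prev[j - 1] + substitution_cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


wer = word_error_rate("turn on the kitchen lights", "turn on kitchen light")
print(f"WER: {wer:.2f}")
```

In this example the hypothesis drops "the" and replaces "lights" with "light", so two errors over five reference words give a WER of 0.40. Note that WER can exceed 1.0 when the hypothesis contains many inserted words.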

H2: Advanced Speech Synthesis and Voice Cloning

OpenAI's 2024 announcements also revolutionize speech synthesis and voice cloning, bringing us closer to creating truly lifelike and personalized voice assistants.

H3: More Natural and Expressive Voices

The new speech synthesis technology utilizes:

- Advanced neural text-to-speech (TTS) models: Generating synthetic voices with remarkable naturalness and expressiveness.

- Improved prosody control: Developers can now fine-tune voice tone, intonation, and inflection to create voices that convey emotions and personality more effectively.

- Increased voice variety: Offering a wide range of voice styles and personalities to suit diverse applications, from friendly and approachable to authoritative and informative.

These improvements lead to more engaging and immersive user experiences.

H3: Personalized Voice Cloning Technology

OpenAI's progress in personalized voice cloning opens exciting possibilities but also raises ethical considerations.

- Technology: Advanced algorithms allow for the creation of synthetic voices that closely mimic a specific person's voice, requiring only a relatively small sample of audio.

- Applications: Potential uses include creating personalized voice assistants for individuals with disabilities, offering accessibility features previously unavailable.

- Ethical Concerns: The potential for misuse, such as creating deepfakes or voice fraud, must be carefully addressed. OpenAI is actively exploring robust safeguards to mitigate these risks.

OpenAI is emphasizing responsible development and deployment of this technology.

H3: Improved Voice Customization Options for Developers

OpenAI provides developers with:

- Intuitive tools and APIs: Simplifying the process of customizing the voice of their voice assistants.

- Granular control over voice parameters: Allowing for precise adjustments to create unique and tailored voices.

- Easy integration with existing platforms: Streamlining the development workflow and reducing integration time.

These improvements empower developers to create truly unique and engaging voice assistant experiences.

H2: Streamlined Development Tools and APIs

OpenAI's commitment to simplifying voice assistant development is evident in its improved tools and APIs.

H3: Simplified Integration Processes

OpenAI's updated APIs and SDKs offer:

- Simplified integration workflows: Reducing development time and effort significantly.

- Improved documentation and tutorials: Making it easier for developers of all skill levels to utilize OpenAI's voice technology.

- Support for multiple programming languages: Enhancing accessibility and flexibility for developers.

These changes make incorporating voice assistant capabilities into applications more accessible than ever before.
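As a rough sketch of what a basic integration can look like, the helper below sends one audio file for transcription and returns the recognized text. It assumes a client object shaped like the one in OpenAI's official Python SDK (version 1.x), where speech-to-text is exposed as `client.audio.transcriptions.create`; the helper name `transcribe_file`, the default model name, and the file path in the usage comment are illustrative choices, not details taken from the announcements.

```python
def transcribe_file(client, audio_path, model="whisper-1"):
    """Send one audio file for transcription and return the recognized text.

    `client` is assumed to expose `audio.transcriptions.create(model=..., file=...)`
    and to return an object with a `.text` attribute, as the OpenAI Python SDK does.
    """
    with open(audio_path, "rb") as audio_file:
        result = client.audio.transcriptions.create(model=model, file=audio_file)
    return result.text


# Usage with the real SDK (requires `pip install openai` and an API key in the
# OPENAI_API_KEY environment variable); "command.wav" is a placeholder path:
#
#     from openai import OpenAI
#     text = transcribe_file(OpenAI(), "command.wav")
```

Passing the client in as a parameter keeps the helper easy to test with a stand-in object and avoids tying application code to one particular way of constructing the client.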

H3: Enhanced Security and Privacy Features

Addressing critical security and privacy concerns is a top priority. OpenAI's new tools include:

- Robust encryption protocols: Protecting voice data throughout the entire process.

- Advanced data anonymization techniques: Minimizing the risk of identifying users from their voice data.

- Transparent user consent mechanisms: Ensuring users have full control over how their data is collected and used.

These measures build trust and ensure responsible use of voice data.
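To make the anonymization idea more concrete, one widely used general technique is to replace user identifiers with keyed hashes before they are stored next to transcripts or logs. The sketch below shows that technique with Python's standard library; it is not OpenAI's documented mechanism, and the function name, the sample identifier, and the key handling are hypothetical. It also only pseudonymizes the identifier: the voice recording itself can still identify a speaker and needs separate protection.

```python
import hashlib
import hmac


def pseudonymize_user_id(user_id: str, secret_key: bytes) -> str:
    """Return a stable keyed hash (HMAC-SHA256, hex) to store in place of the raw user ID."""
    digest = hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


# Hypothetical values for illustration; in practice the key would come from a
# secrets manager, never from source code.
demo_key = b"replace-with-a-secret-key"
token = pseudonymize_user_id("user-123", demo_key)
print(len(token))
```

The same user always maps to the same token under a given key, so records can still be grouped per user, while someone without the key cannot recompute the mapping from a list of known user IDs.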

H3: Expanded Documentation and Support Resources

OpenAI provides developers with comprehensive resources, including:

- Improved documentation and tutorials: Explaining complex concepts in a clear and concise manner.

- Active community forums: Facilitating communication and knowledge sharing among developers.

- Dedicated technical support channels: Providing assistance when needed.

These resources empower developers to overcome challenges and build high-quality voice assistants.

H2: Conclusion

OpenAI's 2024 announcements represent a significant leap forward in voice assistant technology. The advancements in natural language understanding, speech synthesis, and development tools are transforming the way we interact with technology and paving the way for a future where voice assistants are more intuitive, personalized, and accessible than ever before. These improvements significantly impact various industries, from customer service and healthcare to education and entertainment. To learn more about how OpenAI's tools can help you revolutionize voice assistant development, explore OpenAI's documentation and resources. Start building the next generation of voice assistants today!

Featured Posts

-

Closer Security Collaboration Between China And Indonesia

Apr 22, 2025

Closer Security Collaboration Between China And Indonesia

Apr 22, 2025 -

Blue Origin Scraps Rocket Launch Due To Subsystem Problem

Apr 22, 2025

Blue Origin Scraps Rocket Launch Due To Subsystem Problem

Apr 22, 2025 -

Pope Francis Passes Away At Age 88 Following Pneumonia Battle

Apr 22, 2025

Pope Francis Passes Away At Age 88 Following Pneumonia Battle

Apr 22, 2025 -

Cassidy Hutchinson Jan 6 Testimony And Upcoming Memoir

Apr 22, 2025

Cassidy Hutchinson Jan 6 Testimony And Upcoming Memoir

Apr 22, 2025 -

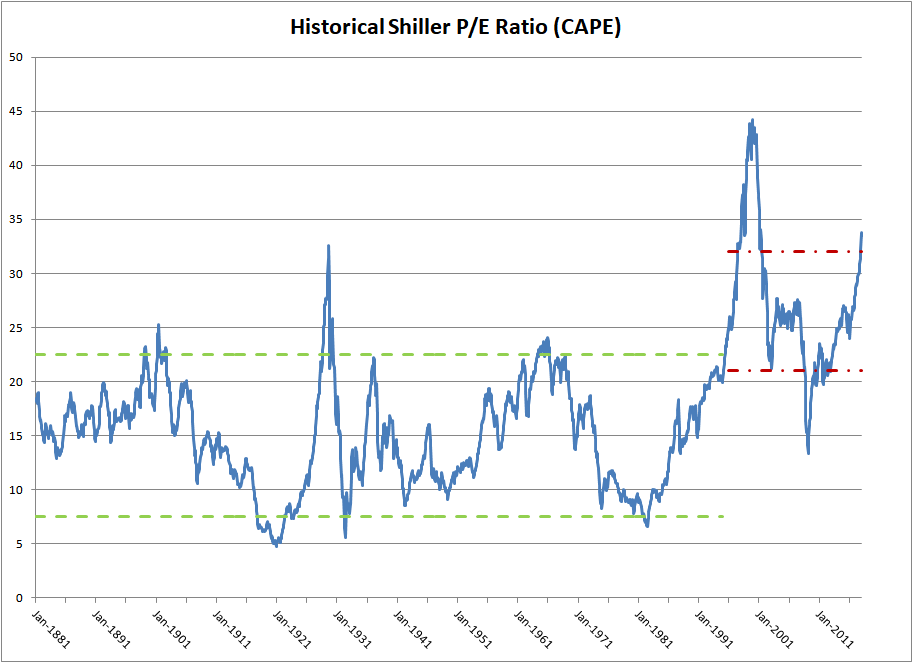

High Stock Market Valuations A Bof A Analysis And Reasons For Investor Calm

Apr 22, 2025

High Stock Market Valuations A Bof A Analysis And Reasons For Investor Calm

Apr 22, 2025