Build Your Voice Assistant Easily With OpenAI's New Tools (2024)

Understanding OpenAI's Relevant APIs for Voice Assistant Development

A voice assistant has to turn speech into text, work out what the user wants, and produce a useful reply. This section outlines the OpenAI APIs most relevant to those jobs and how they fit together. Understanding them is the foundation for the rest of the guide.

- Whisper API: Whisper converts spoken audio into text. It is built to be robust to background noise and varied accents, and it can transcribe many languages, so a single assistant can understand users who speak different languages. Whisper is available both as a hosted model (`whisper-1`) through the OpenAI API and as an open-source model you can run locally.

- GPT-4 API: GPT-4, accessed through the Chat Completions endpoint, provides the assistant's language understanding. It interprets the user's request in context, generates a natural-sounding reply, and can hold a multi-turn conversation when you send it the earlier messages. Its ability to pick up nuance and intent is what makes the interaction feel conversational rather than command-driven.

- Embeddings API: The Embeddings API converts text into numeric vectors whose distance reflects similarity in meaning. Comparing these vectors lets the assistant recognize that differently phrased requests ("turn on the lights" and "switch the lamp on") mean the same thing, and lets it search stored notes or documents by meaning rather than by exact keywords.

- Integration with other OpenAI services: The core APIs can be combined with other OpenAI models. For example, the assistant could pass a spoken description to DALL-E to generate an image, extending it beyond purely spoken responses.
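The list above mentions the hosted Whisper API. A minimal sketch of calling it with the official `openai` Python package (version 1.0 or later), assuming your key is in the `OPENAI_API_KEY` environment variable. The helper name `transcribe_with_api` and its injectable `client` parameter are our own, added so the function can be exercised without a network call.

```python
from typing import Any, Optional


def transcribe_with_api(audio_path: str, client: Optional[Any] = None,
                        language: Optional[str] = None) -> str:
    """Send an audio file to OpenAI's hosted Whisper model and return the transcript.

    `client` defaults to a real openai.OpenAI() instance; tests can pass a stub.
    """
    if client is None:
        from openai import OpenAI  # lazy import: pip install openai (v1+)
        client = OpenAI()  # reads OPENAI_API_KEY from the environment

    # Only send `language` (an ISO-639-1 hint such as "en") when it is given.
    extra = {"language": language} if language else {}
    with open(audio_path, "rb") as audio_file:
        transcript = client.audio.transcriptions.create(
            model="whisper-1",
            file=audio_file,
            **extra,
        )
    return transcript.text.strip()
```

Usage is a single call, for example `user_input = transcribe_with_api("audio.wav")`. The hosted model avoids downloading Whisper weights and installing `ffmpeg` locally, at the cost of a network round trip per request.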

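To make the Embeddings idea concrete: requests with similar meanings get vectors pointing in similar directions, so a new request can be matched to the closest known command by cosine similarity. A minimal sketch assuming the `openai` v1 package and the `text-embedding-3-small` model; the command list, the 0.5 threshold, and the helper names are illustrative assumptions, and the embedding function is injectable so the matching logic can be tried offline.

```python
import math
from typing import Callable, List, Optional, Sequence

Vector = List[float]


def openai_embed(texts: Sequence[str]) -> List[Vector]:
    """Embed texts with OpenAI's Embeddings API (requires OPENAI_API_KEY)."""
    from openai import OpenAI  # lazy import: pip install openai (v1+)
    response = OpenAI().embeddings.create(model="text-embedding-3-small", input=list(texts))
    # Sort by index so vectors line up with the input order.
    return [item.embedding for item in sorted(response.data, key=lambda d: d.index)]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def match_intent(request: str, commands: Sequence[str],
                 embed: Callable[[Sequence[str]], List[Vector]] = openai_embed,
                 threshold: float = 0.5) -> Optional[str]:
    """Return the known command closest in meaning to `request`, or None if none is close enough."""
    if not commands:
        return None
    vectors = embed([request, *commands])  # one batched call: request first, then commands
    request_vec, command_vecs = vectors[0], vectors[1:]
    scores = [cosine_similarity(request_vec, vec) for vec in command_vecs]
    best = max(range(len(commands)), key=scores.__getitem__)
    return commands[best] if scores[best] >= threshold else None
```

In a real assistant the command embeddings would normally be computed once and cached, so each request costs only one embedding call.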
Step-by-Step Guide: Building a Basic Voice Assistant with OpenAI

Let's build a simple voice assistant! This section walks through the steps in order and then ties them together in a short Python example, so even beginners can follow along and end up with a working assistant.

- Setting up your development environment: Create a Python environment and install the libraries the example uses: `openai` (the OpenAI Python library), `openai-whisper` (the open-source Whisper model, imported as `whisper`; it also requires `ffmpeg`), and a text-to-speech library.

- Making API calls with the Python library: The `openai` library wraps requests and responses for all of the APIs above. Create a client with `OpenAI()`; by default it reads your key from the `OPENAI_API_KEY` environment variable, so the key never has to appear in your source code.

- Implementing speech-to-text with Whisper: Record audio from the microphone and transcribe it with Whisper, using either the hosted API or the open-source model running locally. The resulting text is the user input for your assistant.

- Processing user input with GPT-4: Send the transcribed text to GPT-4 through the Chat Completions endpoint, which interprets the request and returns a reply.

- Generating and delivering responses via text-to-speech (TTS): Use a text-to-speech library such as `gTTS`, or a commercial TTS service, to convert GPT-4's reply back into spoken audio for the user.

Example Code Snippets:

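```python
import whisper             # pip install openai-whisper (also needs ffmpeg installed)
from openai import OpenAI  # pip install openai (version 1.0 or later)

# ... (API key setup and other imports) ...

client = OpenAI()  # reads the key from the OPENAI_API_KEY environment variable

model = whisper.load_model("base")      # load the local Whisper model
result = model.transcribe("audio.wav")  # transcribe the recorded audio
user_input = result["text"]

# openai.Completion was removed in openai>=1.0 and text-davinci-003 has been retired;
# GPT-4 is called through the Chat Completions endpoint instead.
response = client.chat.completions.create(
    model="gpt-4",  # or another chat model available to your account
    messages=[
        {"role": "system", "content": "You are a helpful voice assistant."},
        {"role": "user", "content": user_input},
    ],
    max_tokens=150,
    temperature=0.7,
)

assistant_response = (response.choices[0].message.content or "").strip()

# ... (TTS conversion and output) ...
```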
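The example above reads `audio.wav` from disk. One way to produce that file from the microphone is sketched below. It assumes the third-party `sounddevice` package (a PortAudio wrapper) for capture and uses Python's standard `wave` module to write 16-bit mono PCM; the 16 kHz sample rate, the 5-second default duration, and the function names are illustrative choices, not part of the original guide.

```python
import wave
from typing import Sequence

# Whisper processes audio at 16 kHz mono internally, so recording at that rate avoids resampling.
SAMPLE_RATE = 16_000


def save_wav(path: str, samples: Sequence[int], sample_rate: int = SAMPLE_RATE) -> None:
    """Write 16-bit signed mono PCM samples to a WAV file."""
    pcm = b"".join(int(s).to_bytes(2, "little", signed=True) for s in samples)  # WAV is little-endian
    with wave.open(path, "wb") as wav_file:
        wav_file.setnchannels(1)   # mono
        wav_file.setsampwidth(2)   # 2 bytes = 16-bit samples
        wav_file.setframerate(sample_rate)
        wav_file.writeframes(pcm)


def record_microphone(path: str = "audio.wav", seconds: float = 5.0,
                      sample_rate: int = SAMPLE_RATE) -> None:
    """Record from the default input device and save the result as a WAV file."""
    import sounddevice as sd  # lazy import: pip install sounddevice
    frames = int(seconds * sample_rate)
    recording = sd.rec(frames, samplerate=sample_rate, channels=1, dtype="int16")
    sd.wait()  # block until the recording is finished
    save_wav(path, recording[:, 0].tolist(), sample_rate)
```

Calling `record_microphone()` before `model.transcribe("audio.wav")` gives a fixed-length capture; a production assistant would more likely stop on silence or use a push-to-talk button.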

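The final `# ... (TTS conversion and output) ...` step can be implemented in several ways. A minimal sketch using `gTTS`, the library named in the step list, which sends text to Google Translate's text-to-speech service and saves an MP3 (so it needs network access). Playback is left to whatever audio player you use; the helper name `speak_to_file` and its injectable `tts_class` parameter are our own.

```python
from typing import Any, Optional


def speak_to_file(text: str, out_path: str = "reply.mp3", lang: str = "en",
                  tts_class: Optional[Any] = None) -> str:
    """Convert the assistant's reply to speech, save it as an MP3, and return the path."""
    if not text.strip():
        raise ValueError("nothing to speak: empty response text")
    if tts_class is None:
        from gtts import gTTS  # lazy import: pip install gTTS (needs network access)
        tts_class = gTTS
    tts_class(text=text, lang=lang).save(out_path)
    return out_path
```

With the example above, `speak_to_file(assistant_response)` writes `reply.mp3`, which you can then play with any audio player.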
Advanced Features and Customization Options

Once you have a basic voice assistant working, the possibilities for expansion are vast. This section explores advanced features and customization options to enhance its functionality and personalize the user experience.

- Personalizing the assistant's responses: Store user profiles (preferences, names, past interactions) and include the relevant details in each request so replies are tailored to the individual user.

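- Adding context awareness: Chat models do not remember earlier requests on their own; each call sees only the messages you send with it. Store previous turns and include them with every request so follow-up questions such as "and tomorrow?" make sense.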

- Integrating with smart home devices: Control lights, play music, and perform other home automation tasks by voice. One approach is to let GPT-4 choose an action through OpenAI's function (tool) calling and have your code forward it to your smart home platform's API.

- Using embeddings for improved semantic search: Compare the embedding of each request with embeddings of known commands or documents to identify the user's intent even when it is phrased in an unexpected way.

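- Implementing error handling and fallback mechanisms: Catch API errors, retry transient failures, and fall back to a clarifying question or a short apology when the transcript is empty or the request cannot be handled, so the assistant never simply goes silent.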
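Because each Chat Completions request sees only the messages sent with it, context awareness comes down to keeping the conversation history yourself. A minimal sketch, assuming the chat `messages` format used by the `openai` v1 package; the class name and the limit of 10 turns are illustrative, and trimming by number of turns is a simplification of trimming by token count. The system prompt is also a natural place for the per-user preferences mentioned under personalization.

```python
from typing import Dict, List

Message = Dict[str, str]


class ConversationMemory:
    """Keeps a system prompt plus the most recent user/assistant turns."""

    def __init__(self, system_prompt: str, max_turns: int = 10) -> None:
        self.system: Message = {"role": "system", "content": system_prompt}
        self.max_turns = max_turns  # one turn = one user message + one assistant reply
        self.history: List[Message] = []

    def add_user(self, text: str) -> None:
        self.history.append({"role": "user", "content": text})

    def add_assistant(self, text: str) -> None:
        self.history.append({"role": "assistant", "content": text})
        self._trim()

    def _trim(self) -> None:
        # Drop the oldest messages so that at most max_turns user/assistant pairs remain.
        excess = len(self.history) - 2 * self.max_turns
        if excess > 0:
            del self.history[:excess]

    def messages(self) -> List[Message]:
        """The list to pass as `messages=` to client.chat.completions.create."""
        return [self.system, *self.history]
```

In the main loop, call `memory.add_user(user_input)`, pass `memory.messages()` as `messages=`, then call `memory.add_assistant(...)` with the reply text.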

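For smart home control, one approach is OpenAI's tool (function) calling: you describe the actions your code can perform as JSON Schema, the model replies with the name and JSON-encoded arguments of the action it wants, and your code performs it. A minimal sketch assuming the `tools` parameter of `client.chat.completions.create` in the `openai` v1 package; `set_light` and its handler are hypothetical placeholders for whatever smart home API you actually use.

```python
import json
from typing import Any, Callable, Dict, List, Tuple

# Tool schema sent with the request, e.g.
# client.chat.completions.create(model="gpt-4", messages=..., tools=TOOLS)
TOOLS = [{
    "type": "function",
    "function": {
        "name": "set_light",  # hypothetical action
        "description": "Turn a light in a given room on or off.",
        "parameters": {
            "type": "object",
            "properties": {
                "room": {"type": "string", "description": "Room name, e.g. kitchen"},
                "on": {"type": "boolean", "description": "True to switch on, False to switch off"},
            },
            "required": ["room", "on"],
        },
    },
}]


def set_light(room: str, on: bool) -> str:
    # Hypothetical handler: replace with a call to your smart home platform.
    return f"{room} light {'on' if on else 'off'}"


HANDLERS: Dict[str, Callable[..., str]] = {"set_light": set_light}


def run_tool_calls(message: Any) -> List[Tuple[str, str]]:
    """Execute each tool call in an assistant message; return (tool_call_id, result) pairs."""
    results = []
    for call in message.tool_calls or []:
        handler = HANDLERS.get(call.function.name)
        if handler is None:
            results.append((call.id, f"unknown action: {call.function.name}"))
            continue
        try:
            args = json.loads(call.function.arguments)  # arguments arrive as a JSON string
            results.append((call.id, handler(**args)))
        except (json.JSONDecodeError, TypeError) as exc:  # malformed or mismatched arguments
            results.append((call.id, f"could not run {call.function.name}: {exc}"))
    return results
```

Pass `response.choices[0].message` to `run_tool_calls`. To let the model phrase a spoken confirmation, append that assistant message to the conversation, then one `{"role": "tool", "tool_call_id": ..., "content": ...}` message per result, and call the model again.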
Overcoming Challenges and Troubleshooting Common Issues

Building any application comes with challenges. This section addresses common problems encountered during voice assistant development and offers solutions.

- Dealing with noisy audio input: Apply noise reduction before transcription, or switch to a larger Whisper model (such as `small` or `medium` instead of `base`), which is generally more accurate in difficult conditions at the cost of speed.

- Handling ambiguous user requests: Use intent recognition to narrow down what the user means, and ask a clarifying question when a request could reasonably mean more than one thing.

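- Managing API rate limits and costs: Monitor usage (each chat completion response reports its token counts in the `usage` field), cap `max_tokens`, keep prompts short, and back off and retry when you receive a rate-limit error (HTTP 429).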

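- Troubleshooting API errors: The OpenAI Python library raises specific exception types, such as `openai.AuthenticationError`, `openai.RateLimitError`, and `openai.APIConnectionError`. Handling them separately lets you retry transient failures while surfacing configuration problems such as an invalid API key.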

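- Optimizing performance for faster response times: Stream the reply (`stream=True`) and start speaking as soon as the first sentence is ready, keep prompts and conversation history short, and use the smallest Whisper and GPT models that meet your accuracy needs.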
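Rate-limit (HTTP 429) and connection errors are usually transient, so a common remedy is to retry with exponential backoff plus random jitter. A minimal, library-agnostic sketch: in practice you would pass the `openai` v1 exception classes, for example `(openai.RateLimitError, openai.APIConnectionError)`, as `retry_on`. The delays and attempt count are illustrative. Note that the official client also retries some errors on its own, controlled by its `max_retries` option.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_retries(call: Callable[[], T],
                 retry_on: Tuple[Type[BaseException], ...],
                 max_attempts: int = 4,
                 base_delay: float = 1.0,
                 max_delay: float = 20.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Run `call`, retrying on the given exceptions with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller handle the error
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))  # 1s, 2s, 4s, ... capped
            sleep(delay * random.uniform(0.5, 1.0))  # jitter avoids synchronized retries
    raise AssertionError("unreachable")
```

Wrap the API call in a zero-argument function, for example `with_retries(lambda: client.chat.completions.create(...), (openai.RateLimitError, openai.APIConnectionError))`. Errors not listed in `retry_on`, such as an authentication failure, propagate immediately.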

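To cut perceived latency, the reply can be streamed and spoken sentence by sentence instead of waiting for the full response. A minimal sketch assuming the `openai` v1 package, where `stream=True` yields chunks whose new text is in `chunk.choices[0].delta.content`. The sentence splitting is a deliberately simple regular-expression heuristic (it will, for example, also split after abbreviations such as "Dr."), and `speak` in the wiring comment is a hypothetical TTS function.

```python
import re
from typing import Any, Iterable, Iterator

# Split on whitespace that follows sentence-ending punctuation.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_stream(chunks: Iterable[Any]) -> Iterator[str]:
    """Yield complete sentences as soon as they arrive in a streamed chat completion."""
    buffer = ""
    for chunk in chunks:
        if not chunk.choices:
            continue  # some chunks (e.g. usage-only ones) carry no choices
        buffer += chunk.choices[0].delta.content or ""  # content is None on role/finish chunks
        parts = SENTENCE_END.split(buffer)
        for sentence in parts[:-1]:  # every part except the last is a finished sentence
            if sentence.strip():
                yield sentence.strip()
        buffer = parts[-1]  # keep the unfinished tail for the next chunk
    if buffer.strip():
        yield buffer.strip()  # flush whatever remains when the stream ends


# Example wiring (not executed here; `speak` is a hypothetical TTS function):
# stream = client.chat.completions.create(model="gpt-4", messages=messages, stream=True)
# for sentence in sentences_from_stream(stream):
#     speak(sentence)
```

Speaking the first sentence while the rest is still being generated overlaps TTS with generation, which is usually where most of the perceived delay comes from.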
Conclusion

This guide gave an overview of how to build your own voice assistant with OpenAI's tools. We covered the key APIs, including Whisper and GPT-4, walked through the basic development steps, and discussed advanced features and troubleshooting techniques. With these building blocks, a working voice assistant is within reach even for beginners.

Call to Action: Ready to bring your voice assistant to life? Start with the basic pipeline above, consult the OpenAI API documentation for the details of each endpoint, and extend your assistant one feature at a time.

Featured Posts

-

Emerging Markets Rally While Us Stocks Slump

Apr 24, 2025

Emerging Markets Rally While Us Stocks Slump

Apr 24, 2025 -

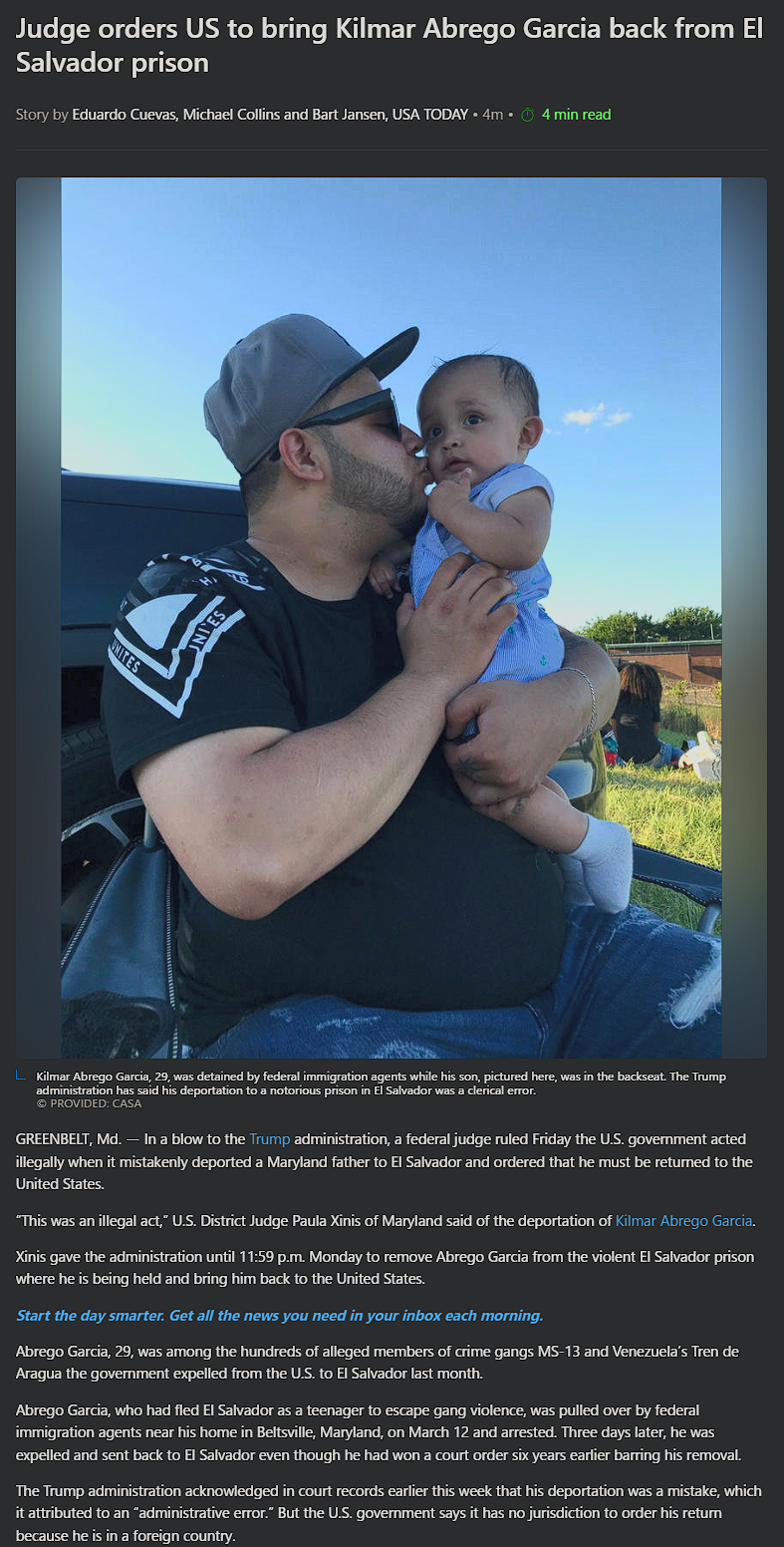

Judge Abrego Garcias Warning Stonewalling In Us Legal Cases Must End

Apr 24, 2025

Judge Abrego Garcias Warning Stonewalling In Us Legal Cases Must End

Apr 24, 2025 -

John Travoltas Shocking Rotten Tomatoes Record A Deep Dive

Apr 24, 2025

John Travoltas Shocking Rotten Tomatoes Record A Deep Dive

Apr 24, 2025 -

Trumps Assurance Powell Remains Fed Chair For Now

Apr 24, 2025

Trumps Assurance Powell Remains Fed Chair For Now

Apr 24, 2025 -

Ted Lassos Resurrection Brett Goldsteins Thought Dead Cat Analogy

Apr 24, 2025

Ted Lassos Resurrection Brett Goldsteins Thought Dead Cat Analogy

Apr 24, 2025